By Luke Fitzgerald on 7 Nov 2019

Google has just rolled out the most significant single change to the way organic search works since RankBrain hit our screens back in 2015, one they say will impact up to 10% of all search queries, so it’s no surprise the SEO community is clamouring to make sense of it all.

Fear not, however: the good news is that if you’ve been doing SEO and content the right way all along, this can only be positive news for your brand, and if you’ve fallen victim to a traffic drop, we’ve got a couple of tips to help turn things around.

What’s all this BERT jazz?

Bidirectional Encoder Representations from Transformers (if you say it quickly three times in front of a mirror, Matt Cutts appears in your bedroom) or the new acronym ‘BERT’ is essentially Big G’s latest AI deployment geared towards understanding searches better than before as explained in their update release notes.

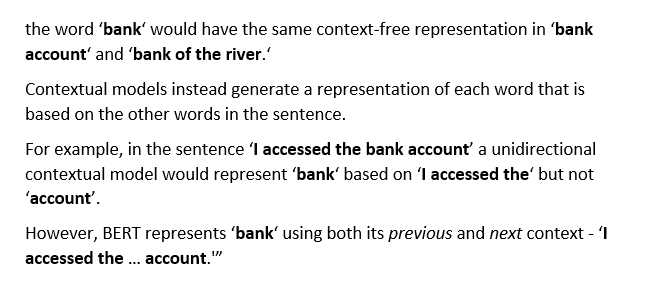

The fundamental concept behind BERT is Natural Language Processing (NLP), which is nothing new and has been used by tech companies over the past decade in many marketing contexts such as keyword research, clickbait filtering and news content syndication, to name but a few. It’s the bidirectional training of bots that makes BERT unique, meaning the AI can now analyse preceding and succeeding words en masse, in an instant, which is potentially game changing for those who make a living from words on the internet.

To understand this bidirectional concept is to understand what BERT’s all about, with this example from Google summing it up nicely:

Still confused? Please don't let the complexity of this jargon bamboozle you!

Following a series of iterations and tests over the past couple of years, Google have now taken the next step to upgrade conversational search - powered largely by the Trojan horses we’ve allowed into our homes, workplaces and cars - by pushing a new transformer-based model that lives within the search algorithm.

The temptation to include all sorts of nerdy Transformers, Sesame Street and Trap Door GIFs is strong with this one.. must resist..

Transformers, within the context of the BERT algorithm, allow Google to better understand the nuances of how a question is asked by a searcher, considering the sentence in its entirety, the words before and after the main keywords and how the words relate to each other so they can deliver more relevant results based on searcher-intent.

The aim of transformers is essentially to read a sentence and create word embeddings (mapping a word to a vector) and within this algorithmic evaluation, try to:

- Assign a weight and meaning to each word

- Mask some of the words discovered

- Try to guess what could potentially come before and after each word

- Run variants of each potential iteration a couple of times

- Provide a coherent understanding of the relationship between all known words

- Get an understanding of the relationship between full sentences
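To make the masking step in the list above a little more concrete, here’s a toy Python sketch. It is not Google’s code and it trains nothing: it simply hides a word from a query and shows the words on both sides that a bidirectional model would get to look at when guessing it. The function names and the default 15% mask rate are our own assumptions for illustration.

```python
import random


def mask_tokens(tokens, mask_rate=0.15, seed=0):
    """Hide a random subset of tokens behind [MASK].

    Returns the masked token list and a dict of {position: hidden word}.
    The mask rate is an illustrative assumption, not a Google setting.
    """
    rng = random.Random(seed)
    n_to_mask = max(1, round(len(tokens) * mask_rate))
    positions = sorted(rng.sample(range(len(tokens)), n_to_mask))
    masked = list(tokens)
    answers = {}
    for pos in positions:
        answers[pos] = tokens[pos]
        masked[pos] = "[MASK]"
    return masked, answers


def context_for(tokens, pos):
    """Return the words before and after a position: the 'bidirectional' view."""
    return tokens[:pos], tokens[pos + 1:]


query = "do estheticians stand a lot at work".split()
masked, answers = mask_tokens(query)

for pos, hidden_word in answers.items():
    left, right = context_for(masked, pos)
    print("Masked query:", " ".join(masked))
    print("Words before:", left)
    print("Words after: ", right)
    print("Word the model would have to guess:", hidden_word)
```

A left-to-right model would only see the “words before” list when making its guess; the bidirectional part is that both lists are available at once.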

To better understand what all of this means, here’s a couple of examples taken directly from the Google blogpost cited above.

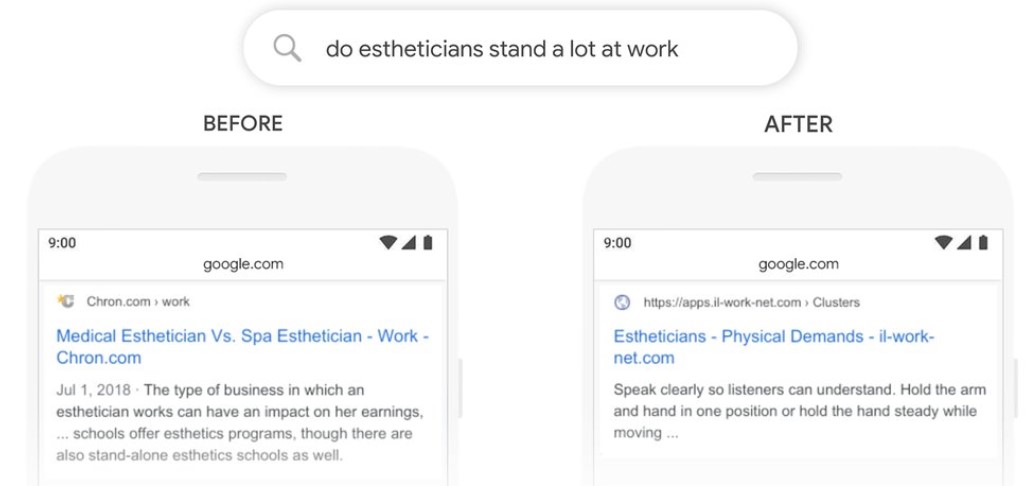

Analysing the query “do estheticians stand a lot at work” - previously, Google’s systems were taking an approach of matching keywords, matching the term “stand-alone” in the result with the word “stand” in the query. But that isn’t the right use of the word “stand” in context. Their BERT models, on the other hand, understand that “stand” is related to the concept of the physical demands of a job, and display a more useful response:

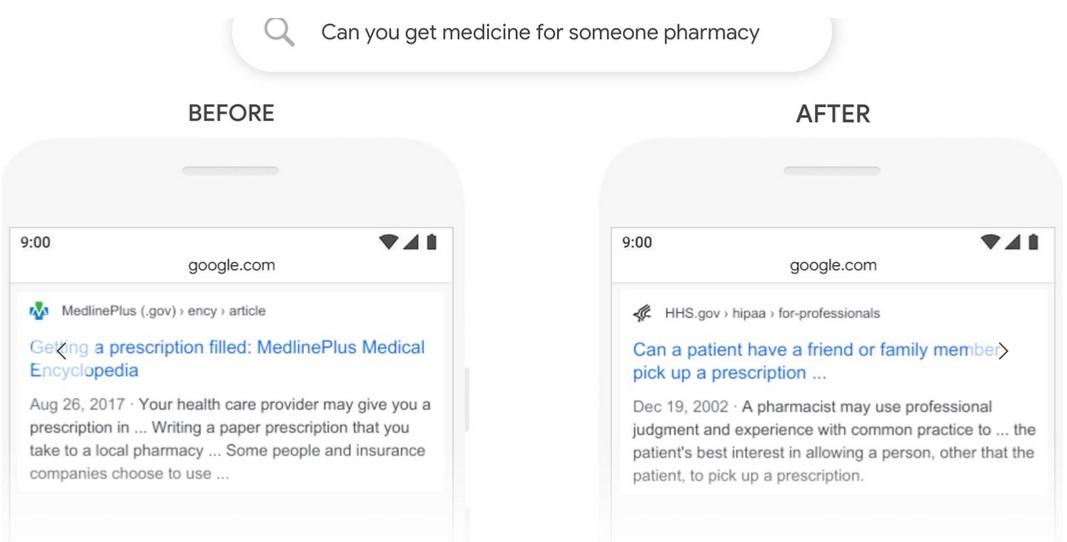
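For the curious, the keyword-matching pitfall described above can be mimicked in a few lines of Python. This is a deliberately naive sketch, nothing like Google’s actual ranking systems, and both result snippets are invented by us purely for illustration. It scores each snippet by how many query words it shares, with no notion of context:

```python
import re


def words(text):
    """Lowercase a string and split it into plain words ('stand-alone' -> 'stand', 'alone')."""
    return re.findall(r"[a-z]+", text.lower())


def keyword_overlap(query, document):
    """Count the distinct query words that also appear in the document."""
    return len(set(words(query)) & set(words(document)))


query = "do estheticians stand a lot at work"

# Hypothetical snippets, written for this example only.
snippet_a = "Medical estheticians work in stand-alone clinics"
snippet_b = "The job is physically demanding because estheticians are on their feet all day"

print(keyword_overlap(query, snippet_a))  # 3: 'estheticians', 'work', 'stand'
print(keyword_overlap(query, snippet_b))  # 1: 'estheticians'
```

Snippet A wins on raw keyword overlap even though snippet B is the one that actually answers the question, which is the kind of gap a context-aware model is meant to close.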

Another great example where BERT has helped the Google search algo grasp the subtle nuances of language that computers don’t quite understand the way humans do, is the following:

Note the publication date on the ‘after’ version above; Google has (rightly) given priority to a 17-year-old piece of indexed content which more accurately answers the question in the given content than the ‘fresher’ one published in 2017 that had previously been the top result without really answering the question properly. This is an important observation and one that underscores the importance of creating good ol’ fashioned, honest, useful content that answers your users’ questions, something our content team have been hammering home for years via robust content auditing and planning.

It’s also worth noting here that each of the specific examples provided by Google to date relates to long-tail, informational search queries. We’ve yet to see any major impact on the high-priority keywords we rank-track for our clients, meaning the estimated 10% impact we’re expecting here isn’t as likely to affect shorter or commercial queries, simply due to there being fewer words for BERT’s transformer to work its magic on. Previous Hummingbird and RankBrain algo iterations would already have nailed down their understanding of the majority of those – this is all about the longtail, the conversational and the not-quite-so-valuable-just-yet.

First Hummingbird, then RankBrain, now BERT

The best way to view BERT is simply as a continuation or upgrade on Google’s continual effort to improve semantic search that has its genesis back in 2012 with the release of the Hummingbird algorithm, enabling it to better understand the meaning of individual words within a query by improving their AI’s neural mapping of entities within the knowledge graph to provide answers, not just a list of ten blue links.

With RankBrain’s emergence in 2015, the search engine giant came to better understand longer and more complex queries and process negative match keywords better in real-time based on user SERP interactions.

Now that BERT has been released into the wild, Google’s AI is starting to comprehend not only strings of individual words and their meaning(s), but also far more about what the underlying intent of the query is when a combination of words is used in a specific way. This is huge, but it doesn’t quite revolutionise what we, as SEOs and content marketers, are actually doing on a day-to-day basis.

If you want to get into the weeds on the technicalities of BERT, then we’d strongly recommend you load up on your stimulant of choice, set aside half an hour and dive into this comprehensive guide from Dawn Anderson on the SearchEngineLand site entitled FAQ: All about the BERT algorithm in Google search – it’s not for the faint-hearted, but a real deep dive for those curious about the mechanics behind such sweeping moves by Google’s AI division.

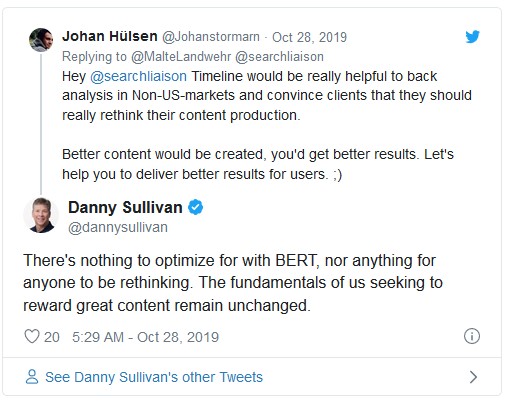

Can we optimise for BERT?

In short, no, not really!

The fundamental principles of Technology, Content Relevance and Authority are still what’ll ultimately dictate how ‘SEO-friendly’ a website is and, powered by a fast, smooth UX, informative, engaging content, Penguin-friendly link building and the user-based SERP engagement metrics still being tallied by the millisecond under RankBrain’s watch, our job of driving sustained organic traffic remains the same.

If you’ve been paying close attention to how conversational search has been evolving over the past decade, creating great content that answers your users’ questions in a well-formatted, well-researched manner in line with the ‘EAT’ principles of Expertise, Authoritativeness and Trustworthiness, then your site has nothing to worry about with the rollout of BERT, or any other quality-based algorithm Google chooses to bestow upon us, for that matter.

Websites that see progressive, cumulative gains on the back of this update can rest assured that what they’re doing is having the desired impact and their SEO plan is working; those who see organic traffic uplifts on the back of BERT are simply doing a better job of answering whatever question a searcher asked than their competitors are.

Conversely, those who see traffic losses from such updates need to take a look in the mirror and see what can be done to improve how well they’re answering questions in line with searcher intent.

How to investigate whether BERT has negatively impacted your site’s traffic

It’s worth understanding that if you have lost traffic, you’ve very likely dropped ranking positions because you should never really have ranked well for that query in the first place and Google is now just ‘correcting’ the SERPs in line with their new, more informed version of reality.

A good first step towards understanding why your site may have taken a hit with this update is by using Google Search Console and looking at each query that your rankings have decreased for. We’re never overly concerned about the ‘Google Dance’ on a couple of specific keywords, but if you’re seeing sustained, cumulative declines across a number of high-value terms, this is a good starting point.

In line with the principles of this quality update, your site should have only dropped significantly for a query if BERT has deemed your previously-ranking search result hasn’t adequately answered what the user is asking.

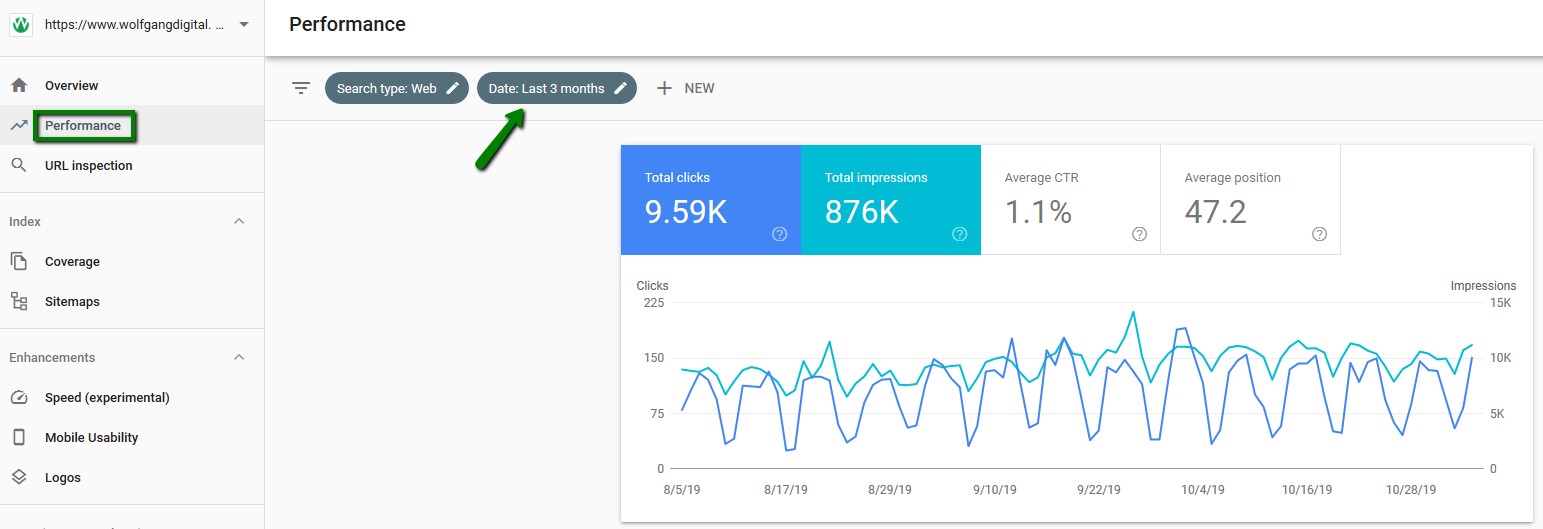

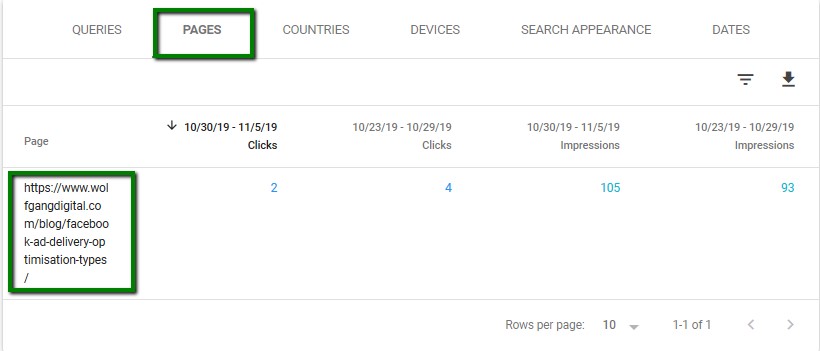

As the update is hot-off-the-shelves and we haven’t seen any major impact (positive or negative) from it this side of the Atlantic just yet, here is an example of what the process for uncovering ranking-related traffic declines in Google Search Console might look like, using the Wolfgang site’s GSC dashboard for illustration:

First, head towards the performance report in GSC and adjust the date filter:

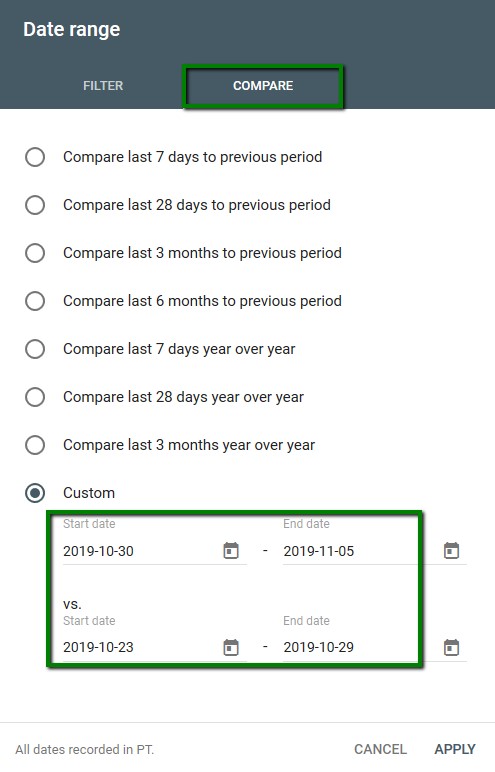

BERT has only been rolling out over the past fortnight, so we’ll compare the most recent week (Wednesday-Tuesday) with the same period the previous week.

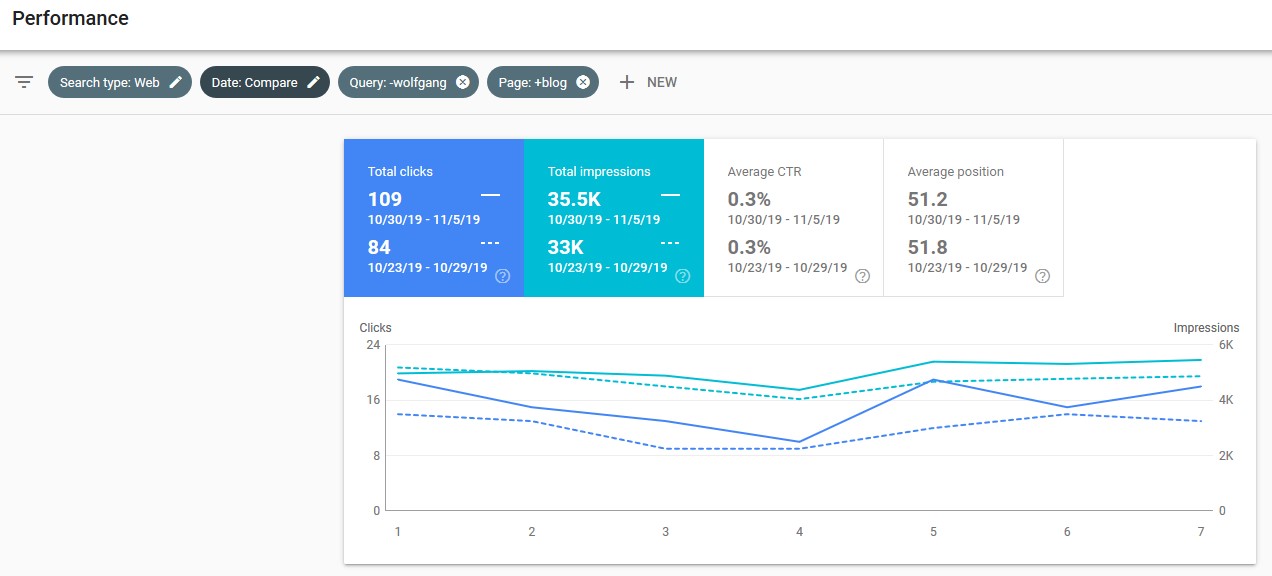

Now, we want to see how much the generic organic traffic (by filtering Query: -wolfgang) to our blog (by filtering Page: +blog) has dropped week-on-week:

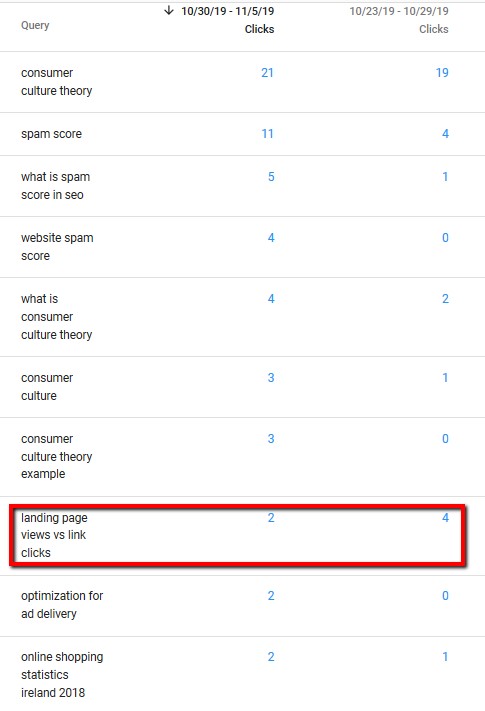

Looking at the above, it’s clear we’ve benefitted greatly from BERT (yippee!), with only 1 of our top 10 click-driving organic keywords seeing a decline versus the week before the BERT rollout.

But, remember, for the purpose of this exercise, we’re trying to identify longer-tail informational queries among the negative movers, so let’s focus on that declining term above ‘landing page views vs link clicks’ which has only 2 clicks versus 4 previously (low volumes, but a 50% drop nonetheless).
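If you’d rather crunch an exported query list than eyeball the dashboard, the same week-on-week check can be sketched in Python. Treat this as a rough illustration under our own assumptions: the class, the function and the four-word ‘long-tail’ threshold are ours, and only the first row reflects the figures quoted above; the other two rows are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class QueryClicks:
    query: str
    clicks_before: int  # clicks in the previous week
    clicks_after: int   # clicks in the most recent week

    @property
    def change_pct(self):
        """Week-on-week change in clicks as a percentage (None if there is no baseline)."""
        if self.clicks_before == 0:
            return None
        return (self.clicks_after - self.clicks_before) / self.clicks_before * 100


def long_tail_decliners(rows, min_words=4):
    """Keep queries with at least `min_words` words whose clicks fell, biggest drop first.

    The four-word threshold is an arbitrary assumption for 'long-tail'.
    """
    decliners = [
        row for row in rows
        if len(row.query.split()) >= min_words and row.clicks_after < row.clicks_before
    ]
    return sorted(decliners, key=lambda row: row.change_pct)


rows = [
    QueryClicks("landing page views vs link clicks", 4, 2),   # figures quoted in this post
    QueryClicks("hypothetical short term", 10, 8),            # placeholder: too short to count
    QueryClicks("hypothetical long tail query that grew", 3, 5),  # placeholder: no decline
]

for row in long_tail_decliners(rows):
    print(f"{row.query}: {row.clicks_before} -> {row.clicks_after} ({row.change_pct:.0f}%)")
```

The short term and the growing query are filtered out, leaving only the long-tail query that lost clicks, which is the one worth a closer look.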

So, if we click on that query from the list, we’re taken through to a section which isolates that query and by clicking on ‘Page’, we can see the page which had 50% less traffic than the week before, in this case it’s https://www.wolfgangdigital.com/blog/facebook-ad-delivery-optimisation-types/

The next step, if this were a real exercise, would be to think about ways we can improve that page so that it better answers the searchers’ query.

There’s no rocket science here; it's just good, solid SEO and content coming into play more than ever. Consider all the normal optimisations we can call upon as SEO professionals, like the contextual internal link I’ve just given the page above and standard on-page SEO best practices like having the question itself within a header tag and keeping your answer to the query to the point and located prominently within your page copy, etc.

If you’d like to expand on your answer even further or restructure it with featured snippets in mind, only consider that once you’re satisfied that you’ve succinctly answered the question itself as clearly as possible - with primarily users (but also these omnipresent fancily-acronymically-named AI bots) in mind at all times!

The Wolfgang Takeaway

While it’d be remiss of us not to cover this significant new algorithm update in detail here on the Wolfgang Blog, we’re confident that it’s an ally to all good digital marketers and like any algo update, a foe to those who’ve been flying a little too close to the sun on Google’s search quality guidelines, or ignoring them entirely.

Feed BERT, and BERT will reward you.

One small step for AI-kind, BERT is one giant leap towards Google’s ultimate goal of understanding the infinitely complex nature of human language and the personal intent behind each of the 5.6 billion queries it processes each and every day.

The concepts driving BERT forward shouldn’t come as any real surprise to anyone who’s studied the organisation and watched the search ecosystem evolve over the past two decades. Google have made huge inroads on their next big challenge of achieving higher keyword accuracy for voice search and better conversational, linguistic intelligence to avoid user frustration and ultimately, monetise the fruits of BERT’s AI which we’ve all helped them methodically fine-tune with our reaction to and interaction with each and every search query over the years!

We’re hugely excited about the future evolution of BERT and all of Google’s AI-powered automation endeavours. The robot revolution has well-and-truly arrived and it’ll be interesting to see some real-world impacts of this algorithm update unfolding on our screens and on our voice-enabled devices in due course.

If you have any thoughts you’d like to share around BERT, or have any search-related questions you’d like us to help your business explore, feel free to comment below or give the best SEO team in the world a shout today!
